A deep dive into frequently occurring integration scenarios and the right solution approaches for each. This session will be useful for those studying for the Integration Architecture domain certification, as it brings typical use cases to life. Join us to learn about common Salesforce integration scenarios and solutions, with different approaches to resolve them.

Common Salesforce Integration Scenarios and Solutions

Here are some Salesforce Integration scenarios.

- Synchronizing Accounts from Salesforce to SAP

- Credit Card payments via an API

- Passport Check via a Background Service

- Multiple Real-Time Fitness Devices

- Querying Bulk Data from Salesforce

- Image / Document upload into Salesforce

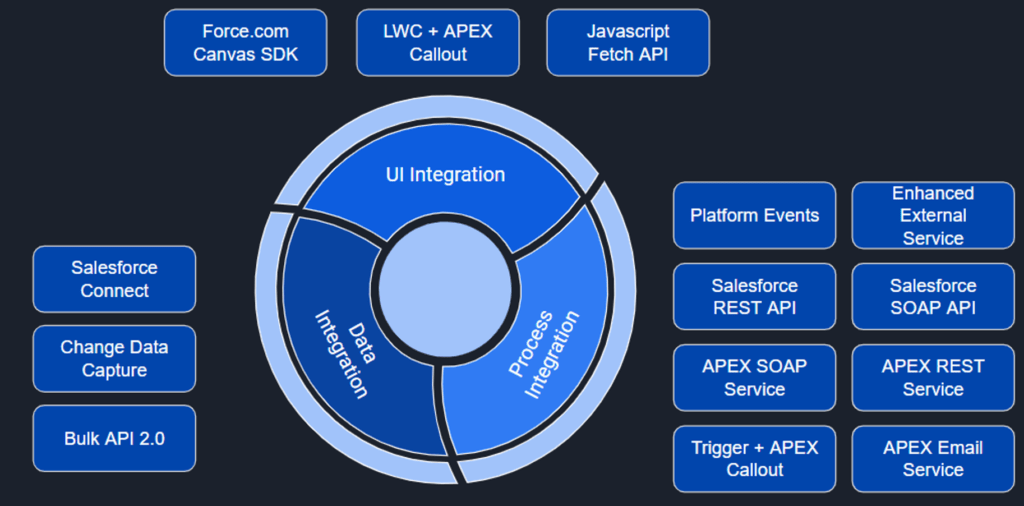

Before this, check our Salesforce Integration Guide to learn about the different options for integrations.

Salesforce Integration Wheel

Salesforce Integration Scenarios

Let's look at each of these Salesforce Integration Scenarios in detail.

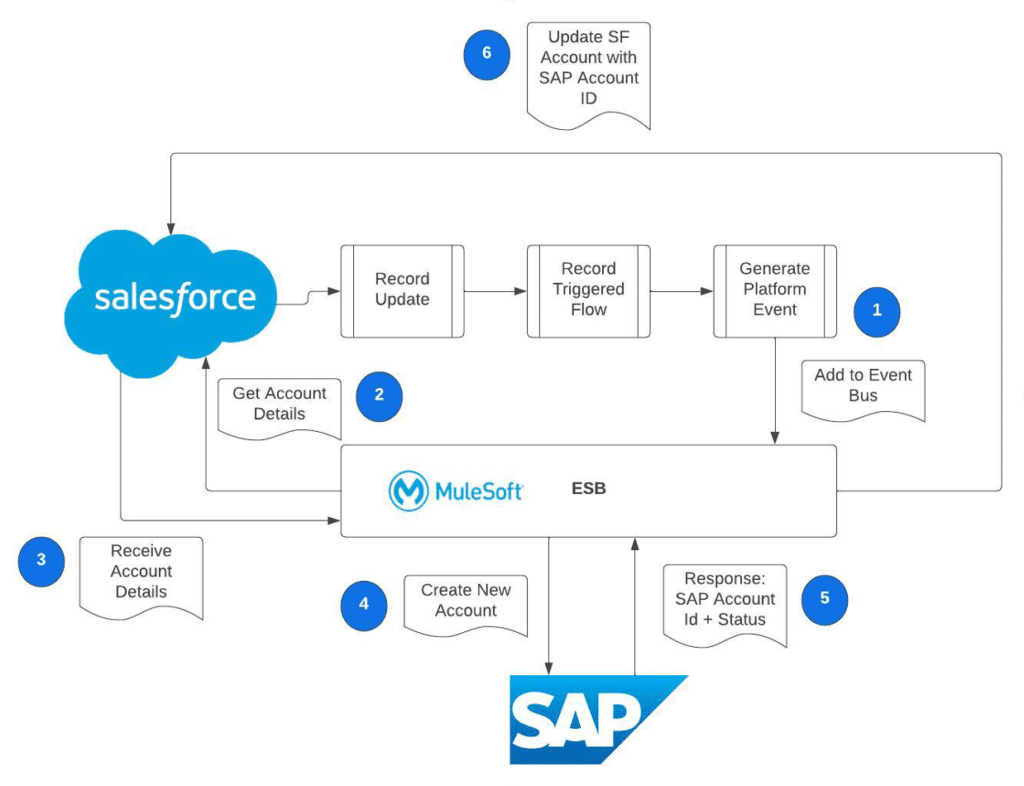

1. Synchronizing Accounts from Salesforce to SAP

A customer would like to synchronize their Accounts with SAP. When an Account record is created or updated in Salesforce, they would like the Account record to be created or updated in SAP. There is an existing ESB in place. The generated customer number will then be written back to Salesforce.

The Flow

Solution

Integration Pattern Used: RPI – Fire & Forget

Integration Flow Steps

- User saves a record, which invokes a record-triggered flow. The flow checks whether an integration-related field has changed.

- Flow generates a Platform Event containing AccountId, Timestamp and Operation [Create, Update, Delete] and publishes it to the Event Bus.

- ESB reads Platform Event and makes a REST API call to Salesforce to get details about the Account.

- ESB makes a call to SAP to create the Account.

- SAP responds with status and the SAP Customer Number

- ESB makes a REST API call to Salesforce to update the Account:

  - Sync Status = Success

  - SAP Customer Number = XXXXXX

  - Sync Error = Blank
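
The ESB side of the steps above can be sketched as a small handler. This is a minimal Python sketch, not a MuleSoft implementation: `AccountEvent`, `handle_account_event` and the three callables are hypothetical stand-ins for the Platform Event subscription, the Salesforce REST API and the SAP interface. The key names `Sync_Status`, `SAP_Customer_Number` and `Sync_Error` are assumed from the field labels listed above, and the error branch is an assumption about how a failure might be recorded.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class AccountEvent:
    """Payload of the Platform Event published by the flow (names assumed)."""
    account_id: str
    timestamp: str
    operation: str  # "Create", "Update" or "Delete"


def handle_account_event(
    event: AccountEvent,
    fetch_account: Callable[[str], Dict[str, str]],
    create_in_sap: Callable[[Dict[str, str]], str],
    update_account: Callable[[str, Dict[str, str]], None],
) -> Dict[str, str]:
    """Process one event on the ESB and return the fields written back."""
    try:
        account = fetch_account(event.account_id)   # REST call to Salesforce
        customer_number = create_in_sap(account)    # call to SAP
        result = {
            "Sync_Status": "Success",
            "SAP_Customer_Number": customer_number,
            "Sync_Error": "",
        }
    except Exception as exc:
        # Assumed error handling: record the failure, leave the number untouched.
        result = {"Sync_Status": "Error", "Sync_Error": str(exc)}
    update_account(event.account_id, result)        # REST call back to Salesforce
    return result
```

Passing the three integrations in as callables keeps the handler easy to exercise with stubs, for example `handle_account_event(event, lambda i: {"Id": i}, lambda a: "C-1001", print)`.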

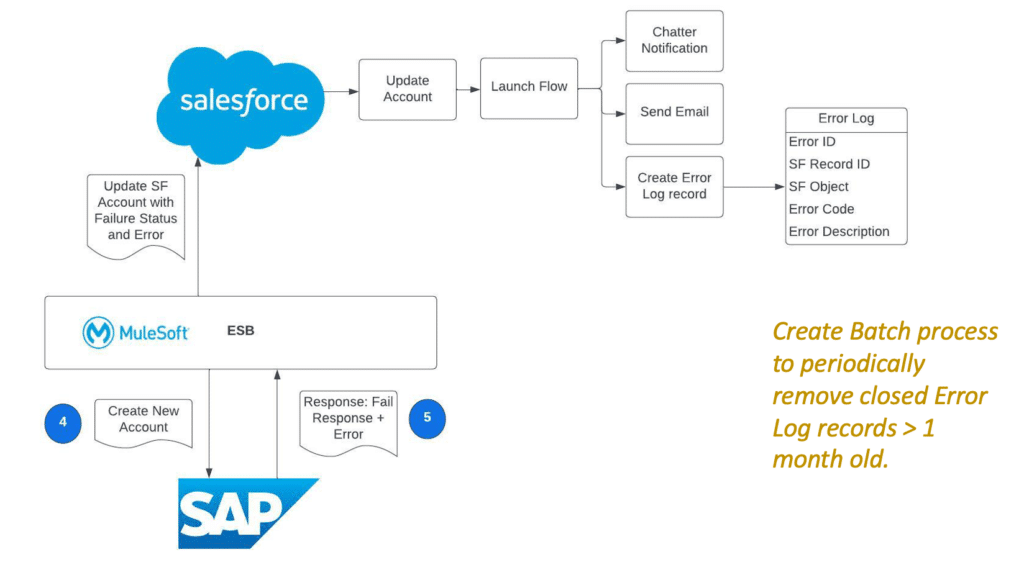

Error Handling

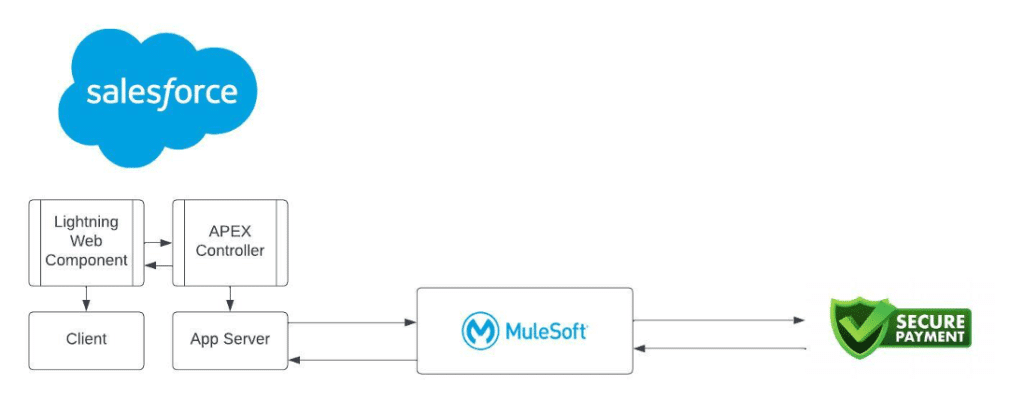

2. Credit Card payments via an API

An e-commerce application built in Community Cloud – Salesforce requires users to make a payment on an online portal. The e-commerce platform uses an online payment gateway called SecurePay. SecurePay exposes an API to perform credit card payments. Outline how you would build an integration for this use case. What would you use in Salesforce? Assume an ESB is in place. How will you ensure that the integration is scalable and will perform under concurrency and load?

Flow

- LWC invokes an Apex method to initiate a callout, and control is returned to the page.

- Apex method receives the request and makes a synchronous callout to the specified Mulesoft ESB endpoint.

- Mulesoft ESB makes a call to the SecurePay API.

- Mulesoft ESB receives a response, applies any transformation and passes the response back to the calling Apex method.

The full end-to-end flow here is Synchronous.

Solution

Integration Pattern: RPI – Request Reply

- Transactions in Salesforce that take > 5 seconds to complete are classified as ‘Long Running Transactions’

- An org can have a maximum of 10 Long Running Transactions at any point in time.

- Since Winter '20, all callouts are excluded from the long-running request limit, so the Continuation framework is no longer required.

Error Handling: The response passed back from SecurePay is relayed back to Salesforce, and a record can be logged in the Error Log object and surfaced on screen.
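
The request-reply call and its error handling can be sketched as follows. This is a minimal Python illustration rather than Apex: `take_payment`, `call_esb`, the `error_log` list and the `status`/`message` keys of the reply are all hypothetical, since the actual response format of the ESB and SecurePay is not given here.

```python
from typing import Callable, Dict, List

# Stand-in for the Error Log object in Salesforce (assumed structure).
error_log: List[Dict[str, str]] = []


def take_payment(
    call_esb: Callable[[Dict[str, str]], Dict[str, str]],
    payment: Dict[str, str],
) -> Dict[str, str]:
    """Call the ESB synchronously and wait for the reply (Request-Reply)."""
    try:
        # Blocks until the ESB relays the gateway response.
        reply = call_esb(payment)
    except Exception as exc:  # e.g. a timeout or connection failure
        reply = {"status": "ERROR", "message": str(exc)}
    if reply.get("status") != "OK":
        # Log the failure so it can be reviewed and shown on screen.
        error_log.append(
            {"order": payment.get("order", ""), "message": reply.get("message", "")}
        )
    return reply
```

The caller always gets a reply to display, whether the gateway accepted the payment, declined it, or could not be reached.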

Integration Security

- Use Named Credentials to specify the URI of the Callout Endpoint and Authentication Parameters

- Reference the Named Credential in the Apex class rather than hard-coding or exposing security credentials

- You can have named credentials with different values per environment to help with Integration Testing in Sandbox environments

- Create an External Credential specifying the authentication protocol, and associate a permission set with the External Credential.

- The user must have a profile or permission set associated with the External Credential to be able to make the callout

- Use Custom Headers to incorporate user details which can help streamline the service response

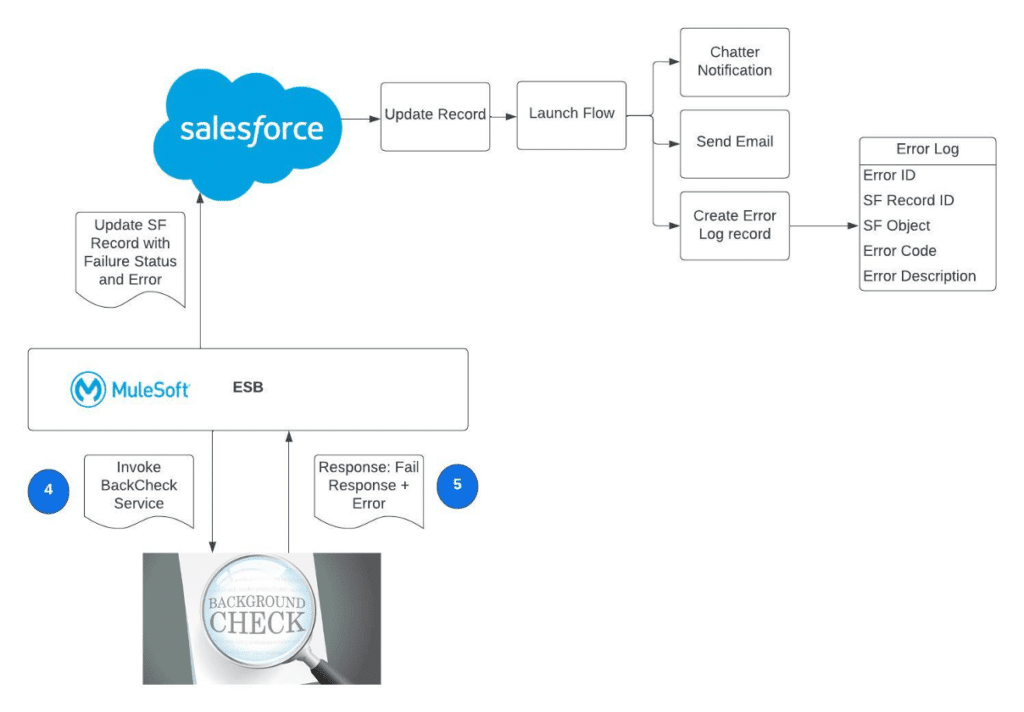

3. Passport Check via a Background Service

As part of the application process for Passports, a background check has to be conducted. BackCheck is a background checking service that takes 2-3 days to perform verification and provide a response back to the calling service. BackCheck exposes a REST API that allows a calling system to invoke the background checking service. As part of this, an image file containing a photo of the user must be sent via the REST API. When a user completes an Application in Salesforce, the background check must be automatically invoked. Once the background check is completed, the Salesforce application record must be updated with the results. Please advise on how you would build this integration.

Flow

Solution

Integration Pattern: RPI – Fire & Forget

Integration Approach: Long Running Transaction

Mulesoft will maintain a log table recording that this transaction is awaiting a response. When the response comes in from BackCheck, Mulesoft checks the log table and is able to complete the job by updating the invoking record in Salesforce. This is an example of a Long Running Transaction.

Create an endpoint in Mulesoft to receive the response from the BackCheck service.

A timeout scenario also needs to be considered, for when no response is received after X days. This is managed via a Scheduled Flow on the record, which moves the calling record to an Error state.
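
The log table and the timeout rule can be sketched as below. This is a minimal Python sketch with an in-memory dictionary standing in for the Mulesoft log table; `record_request`, `handle_callback`, `find_timed_out` and the correlation id are hypothetical names. In the design described here the timeout is enforced by a Scheduled Flow in Salesforce, so `find_timed_out` only illustrates the rule.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional

# Log table kept by the middleware: correlation id -> pending request details.
pending: Dict[str, Dict[str, object]] = {}


def record_request(correlation_id: str, application_id: str, sent_at: datetime) -> None:
    """Note that a background check was sent and a response is still awaited."""
    pending[correlation_id] = {"application_id": application_id, "sent_at": sent_at}


def handle_callback(correlation_id: str, result: str) -> Optional[Dict[str, str]]:
    """Match a callback to its request; return the update for the Salesforce record."""
    entry = pending.pop(correlation_id, None)
    if entry is None:
        return None  # unknown or already completed
    return {"application_id": str(entry["application_id"]), "status": result}


def find_timed_out(now: datetime, max_wait: timedelta) -> List[str]:
    """Return application ids whose response is overdue (the 'X days' check)."""
    return [
        str(entry["application_id"])
        for entry in pending.values()
        if now - entry["sent_at"] > max_wait
    ]
```

Removing the entry when the callback arrives means a duplicate callback for the same request is ignored rather than applied twice.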

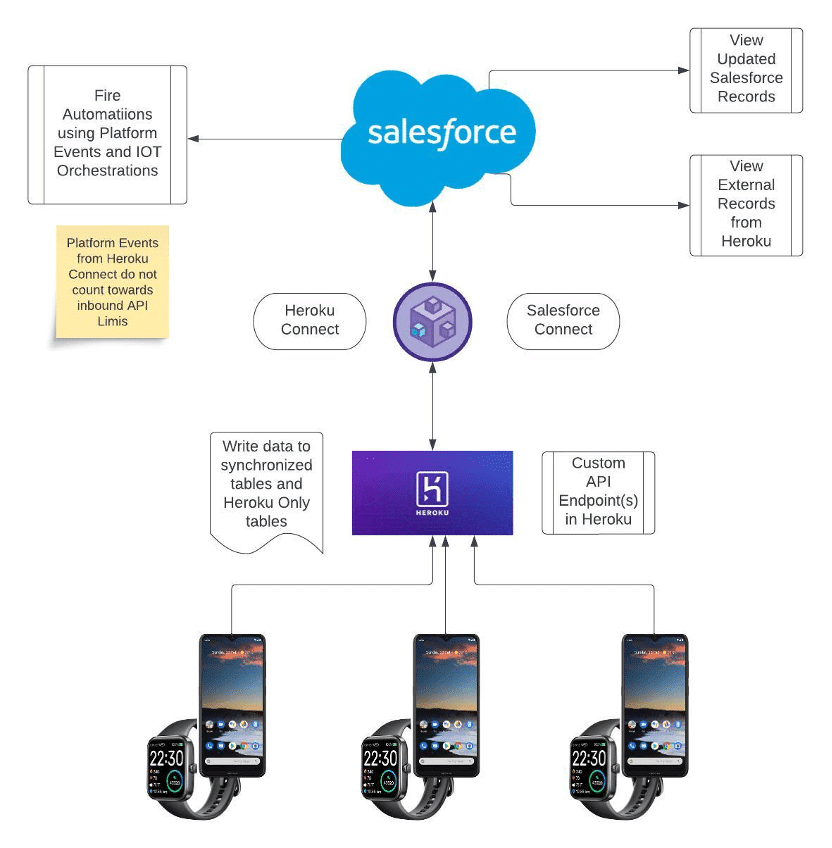

4. Multiple Real-Time Fitness Devices

In this scenario we have multiple fitness devices (smart-watches) (10M+) which send real-time updates on a person’s activity every few seconds. At any point in time, a user should be able to log in to a Salesforce community and see their updated activity details. How will you build this integration? Would you have the devices send API calls directly into Salesforce, or would you consider building a back-end service off platform to receive the real-time calls? Advise how this will work.

Solution

Pattern: Data Virtualization & Remote Call In

The goal of the architecture is to avoid high-volume inbound API calls into Salesforce.
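
A toy model of that goal, assuming an off-platform service sits between the devices and Salesforce: the devices write to the service every few seconds, and the data is read only when a user opens their page. `ActivityStore`, `ingest` and `latest` are hypothetical names, and the in-memory dictionary stands in for whatever storage the real service would use.

```python
from typing import Dict, Optional


class ActivityStore:
    """Off-platform service that absorbs device traffic (in-memory stand-in)."""

    def __init__(self) -> None:
        self._latest: Dict[str, Dict[str, int]] = {}
        self.writes = 0  # counts device calls handled off platform

    def ingest(self, user_id: str, steps: int, heart_rate: int) -> None:
        """Called by devices every few seconds; never touches Salesforce."""
        self._latest[user_id] = {"steps": steps, "heart_rate": heart_rate}
        self.writes += 1

    def latest(self, user_id: str) -> Optional[Dict[str, int]]:
        """Called on demand when a user views their activity in the community."""
        return self._latest.get(user_id)
```

However many times `ingest` is called, Salesforce sees one read per page view rather than one inbound call per device update.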

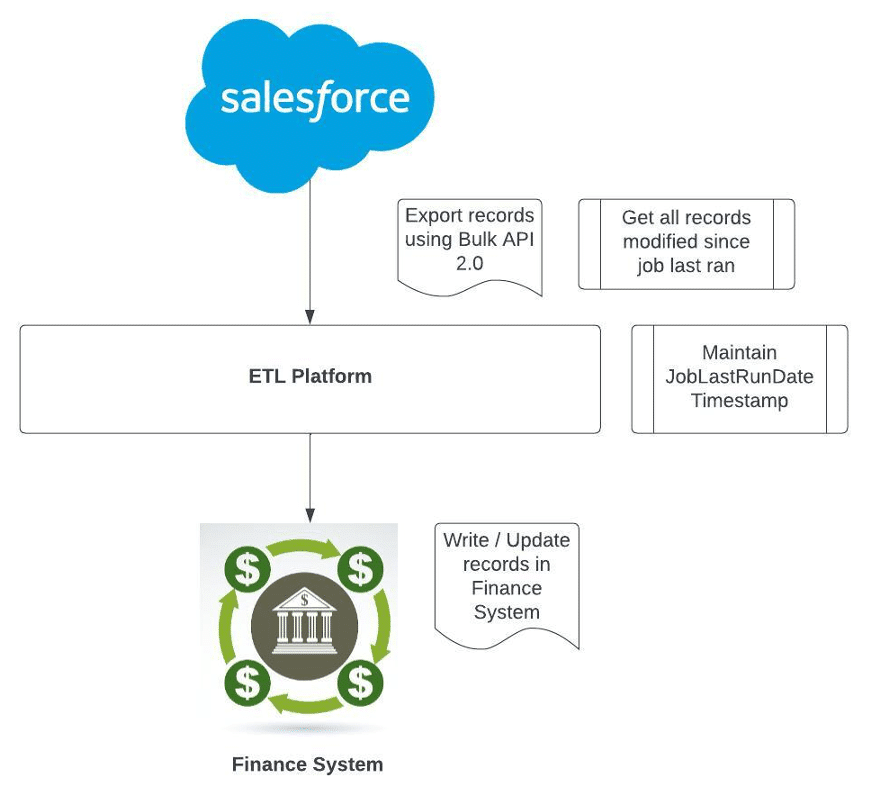

5. Querying Bulk Data from Salesforce

We want to synchronize a volume of Billing Account records from Salesforce nightly to the Finance system. How would you do this? Assume an ETL tool is present. What APIs would you use to extract the data from Salesforce to send to the Finance system? How many records would we be able to pull down from Salesforce in one go?

Flow

Bulk API Operation

Bulk API is REST-based and works asynchronously. When you submit a query, Bulk API decides the best way to divide the full query result into chunks and sends back each chunk (PK chunking). This avoids timeouts and lets you process one chunk before the next arrives. Custom retrieval chunking is also possible.

- Step 1: Make a Bulk API call with the query string.

- Step 2: Salesforce responds with the job ID.

- Step 3: Salesforce starts retrieving data in the back-end.

- Step 4: Make a request including the job ID and the number of records to return.

- Step 5: Get records back in the response, along with the Sforce-Locator value.

- Step 6: Use Sforce-Locator to get the remaining records in sequence.

Bulk API Response

- The Sforce-Locator value is provided in the response, as a response header.

- If Sforce-Locator is not null, more records are present.

- Use the Sforce-Locator value in the next request.
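
The locator loop can be sketched as below. This is a minimal Python sketch that makes no HTTP calls: `iter_results` and the `PageFetcher` signature are hypothetical, and `fake_fetcher` simulates paging with a numeric offset purely for illustration (a real locator is an opaque value returned by Salesforce).

```python
from typing import Callable, Iterator, List, Optional, Tuple

# A page fetcher takes (job_id, locator, max_records) and returns
# (rows, next_locator), where next_locator is the Sforce-Locator value.
PageFetcher = Callable[[str, Optional[str], int], Tuple[List[str], str]]


def iter_results(fetch_page: PageFetcher, job_id: str, max_records: int) -> Iterator[str]:
    """Yield every row of a query job by following Sforce-Locator."""
    locator: Optional[str] = None  # the first request carries no locator
    while True:
        rows, next_locator = fetch_page(job_id, locator, max_records)
        yield from rows
        if next_locator in ("", "null"):  # no more records
            break
        locator = next_locator


def fake_fetcher(all_rows: List[str]) -> PageFetcher:
    """Build an in-memory fetcher that pages through all_rows (illustration only)."""
    def fetch(job_id: str, locator: Optional[str], max_records: int) -> Tuple[List[str], str]:
        start = int(locator) if locator else 0
        end = start + max_records
        next_locator = str(end) if end < len(all_rows) else "null"
        return all_rows[start:end], next_locator
    return fetch
```

Because `iter_results` is a generator, each page can be processed before the next one is requested, for example `for row in iter_results(fake_fetcher(rows), "job-1", 3): ...`.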

Solution

Integration Pattern: Batch Data Sync

Integration Approach: Bulk API 2.0

- Bulk API 2.0 query jobs enable asynchronous processing of SOQL queries.

- Bulk API 2.0 query jobs automatically determine the best way to divide your query job into smaller chunks to avoid failures or timeouts.

- Automatically handles retries.

- If you receive a message that the API retried more than 15 times, apply filter criteria and try again.

- The response body of a query job is compressed.

- CSV is a supported response type

PK Chunking Explained

- PK chunking splits a query based on record ID

- Each chunk is processed as a separate batch

- Chunk size default = 100,000

- Chunk size max = 250,000

- Enable PK chunking when querying tables with more than 10 million records or when a bulk query consistently times out.

- Use JobID and BatchID to retrieve a specific batch.

- Effectiveness of PK chunking depends on the specifics of the query and the queried data.
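
To make the idea of splitting by record ID concrete, here is a conceptual Python sketch. It is not how Salesforce computes chunk boundaries internally: `pk_chunks` and `chunk_filter` are hypothetical helpers, and the sketch assumes the full sorted list of IDs is already in hand, which the platform does not require.

```python
from typing import List, Tuple


def pk_chunks(sorted_ids: List[str], chunk_size: int = 100_000) -> List[Tuple[str, str]]:
    """Split a sorted list of record IDs into (first_id, last_id) ranges."""
    if not 0 < chunk_size <= 250_000:  # 250,000 is the maximum chunk size
        raise ValueError("chunk_size must be between 1 and 250,000")
    return [
        (sorted_ids[i], sorted_ids[min(i + chunk_size, len(sorted_ids)) - 1])
        for i in range(0, len(sorted_ids), chunk_size)
    ]


def chunk_filter(first_id: str, last_id: str) -> str:
    """Render the Id range filter that one batch would apply (illustrative)."""
    return f"Id >= '{first_id}' AND Id <= '{last_id}'"
```

Each range becomes its own batch, so a query over ten IDs with `chunk_size=4` yields three batches covering four, four and two records.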

Bulk API 2.0 doesn’t support SOQL queries that include any of these items:

- GROUP BY, LIMIT, ORDER BY, OFFSET, or TYPEOF clauses.

- Don’t use ORDER BY or LIMIT, as they disable PK chunking for the query. With PK chunking disabled, the query takes longer to execute.

- Aggregate Functions such as COUNT().

- Date functions in GROUP BY clauses. (Date functions in WHERE clauses are supported.)

- Compound address fields or compound geolocation fields. (Instead, query the individual components of compound fields.)

- Parent-to-child relationship queries. (Child-to-parent relationship queries are supported.)

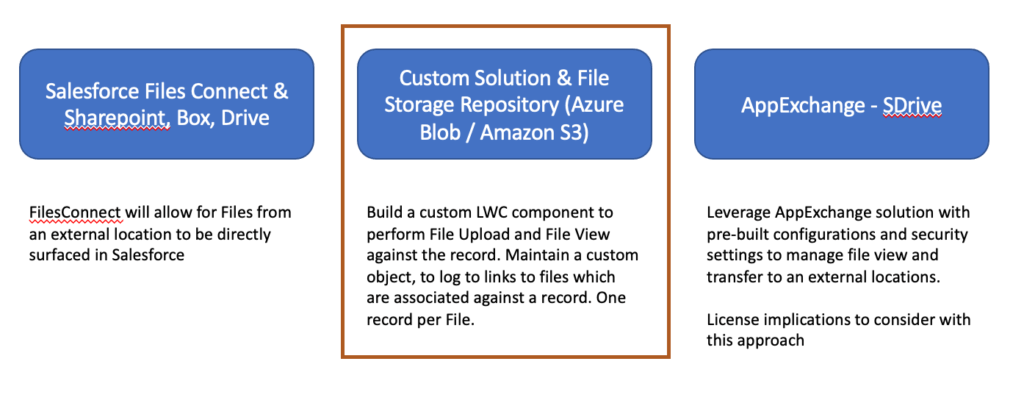

6. Image / Document upload into Salesforce

An estate agent creates Property records in Salesforce. Against each property there is a need to upload property images, 20 on average. Each image can be 10MB in size. There are 500,000 properties that need to be migrated onto Salesforce. What is your solution for storing the images and making sure they are accessible against the record?

Solution

Storage Calculation: 500,000 properties * 20 images * 10 MB = 100,000,000 MB, which is about 97,656 GB (roughly 95 TB).
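
The arithmetic can be checked with a few lines of Python. The variable names are illustrative, and the conversion assumes binary units (1 GB = 1024 MB).

```python
properties = 500_000
images_per_property = 20
mb_per_image = 10

total_mb = properties * images_per_property * mb_per_image
total_gb = total_mb / 1024  # binary units: 1 GB = 1024 MB
total_tb = total_gb / 1024

print(f"{total_mb:,} MB = {total_gb:,.0f} GB = {total_tb:.1f} TB")
# prints: 100,000,000 MB = 97,656 GB = 95.4 TB
```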

Integration Principles

A Salesforce Vlocity integration is very rare, so we agree on a different approach with the customer and set it up accordingly.