Learn the techniques, patterns and strategies for handling data archiving, backup and restore in Salesforce. Data archiving has proved to be one of the most widely adopted approaches to managing data growth and optimizing storage usage in Salesforce. According to the recent Salesforce Data Archiving Trends, companies are actively looking for enterprise-level data archivers to keep their historical data.

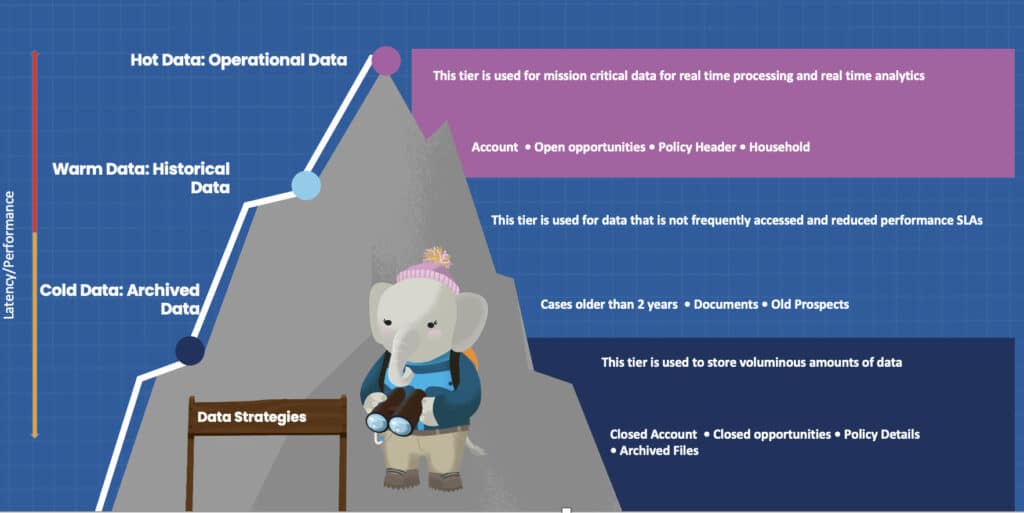

Introduction to Data Tiering

Data tiering is the process of moving data from one storage tier to another. It allows an organization to keep the appropriate data on the appropriate storage technology in order to:

- Reduce Costs

- Optimize Performance

- Reduce Latency

- Allow recovery

As more companies go digital in the post-COVID era, we expect demand for digitally stored data to increase significantly, and with it the need for appropriate storage and archival technologies. If you want to learn about Building Scalable Solutions on Salesforce, please check this post.

Data Tiering Pyramid

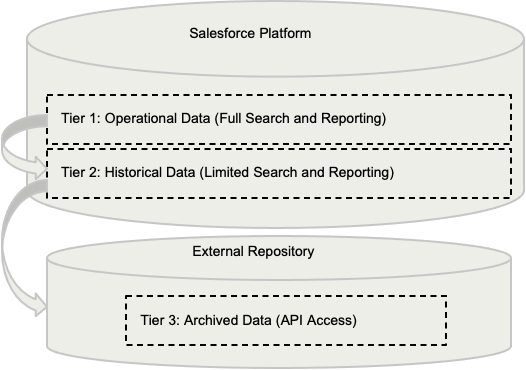

Data Archiving and Backup Options & Architecture

Salesforce data archiving strategies present in the market today

On Salesforce Platform: Let's see which patterns are available for data archiving and backup on the Salesforce platform.

- Pattern 1: Custom Storage Objects

- Pattern 2: Salesforce Big Object

Off Salesforce Platform: Let's see which patterns are available for data archiving and backup off the Salesforce platform.

- Pattern 3: On Prem DataStore

- Pattern 4: 3rd Party Vendor product

Archiving On Platform

Let's see which patterns are available in Salesforce for on-platform data archiving.

Pattern 1: Salesforce Standard/Custom Objects

In today’s era of Big Data, any business’s future existence and growth largely depend on its strategy for collecting, storing, retaining, accessing, and managing business data. Let's see how we can use Pattern 1.

Record Archive Indicator

An archive indicator flag is added to the object. SOQL queries, batch jobs, Reports and Dashboards are then updated to include the archive flag in their selection criteria.

Benefits

- Data stays in Salesforce and can be part of Salesforce Reports/Dashboards

- Low complexity and highly flexible

- Platform data security

Drawbacks

- Storage usage and cost issue remains

- Cannot be used for non-customizable objects

- Requires changes to the Salesforce solution (queries, reports, etc.)

- Performance on large data volumes would still be a challenge
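To make the archive-flag idea concrete, here is a minimal sketch of how existing queries might be adjusted to skip archived records. It is written in Python purely for illustration and only manipulates query strings. The field name `Is_Archived__c` and the helper `exclude_archived` are assumptions, not part of any Salesforce API, and the helper only handles simple queries without subqueries.

```python
import re

# Hypothetical custom checkbox field used as the archive indicator.
ARCHIVE_FLAG = "Is_Archived__c"

# Clauses that must stay after the WHERE clause in a simple SOQL query.
TRAILING = re.compile(r"\s+(GROUP\s+BY|ORDER\s+BY|LIMIT)\b", re.IGNORECASE)
WHERE = re.compile(r"\bWHERE\b\s+(.*)$", re.IGNORECASE | re.DOTALL)


def exclude_archived(soql: str, flag: str = ARCHIVE_FLAG) -> str:
    """Return the query with an extra `flag = false` condition.

    Only simple, single-level queries are handled (no subqueries).
    """
    condition = f"{flag} = false"
    match = TRAILING.search(soql)
    if match:
        head, tail = soql[:match.start()], soql[match.start():]
    else:
        head, tail = soql, ""
    if WHERE.search(head):
        # Wrap the existing filter so any OR conditions keep their meaning.
        head = WHERE.sub(lambda m: f"WHERE ({m.group(1)}) AND {condition}", head)
    else:
        head = f"{head} WHERE {condition}"
    return head + tail


print(exclude_archived("SELECT Id FROM Case"))
print(exclude_archived(
    "SELECT Id FROM Case WHERE Status = 'Closed' ORDER BY CreatedDate LIMIT 10"
))
```

Every query, report filter and batch job that should ignore archived data needs an equivalent condition, which is the "requires changes to the Salesforce solution" drawback listed above.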

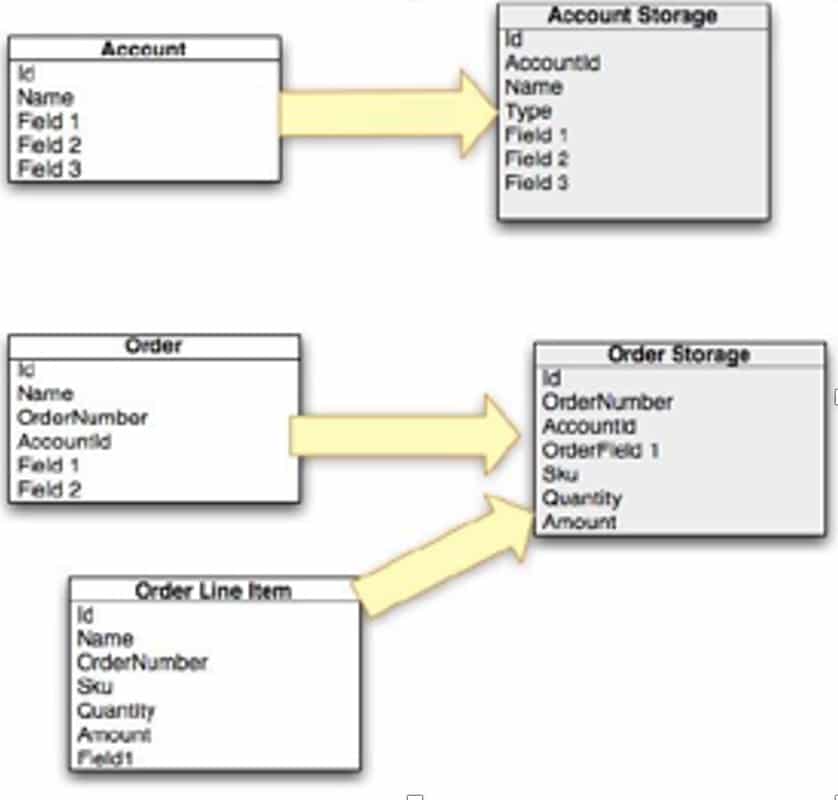

Custom Storage Objects

Create custom storage objects to hold archived records, then move the records to be archived into those storage objects.

Benefits

- Data stays in Salesforce and can be part of Salesforce Reports/Dashboards

- No changes to existing Salesforce artifacts (SOQL, Reports)

- Applicable to any Salesforce objects, including system objects

- Platform data security

Drawbacks

- Storage usage and cost issue remains

- Custom solution required to move data to the storage object (could leverage batches)
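The custom move to a storage object could look roughly like the sketch below. It is plain Python working on in-memory dictionaries, not Apex and not a Salesforce client. The storage-object field names in `FIELD_MAP`, the helper names, and the batch size of 200 are all illustrative assumptions.

```python
from typing import Dict, Iterator, List

# Hypothetical mapping from source Case fields to fields on a hypothetical
# custom storage object (for example Case_Archive__c).
FIELD_MAP = {
    "Id": "Original_Id__c",
    "Subject": "Subject__c",
    "ClosedDate": "Closed_Date__c",
}


def to_archive_record(source: Dict[str, object]) -> Dict[str, object]:
    """Copy the mapped fields of a source record into storage-object form."""
    return {target: source.get(field) for field, target in FIELD_MAP.items()}


def in_batches(records: List[dict], size: int = 200) -> Iterator[List[dict]]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def plan_archive(records: List[dict], size: int = 200) -> List[dict]:
    """Build one step per batch: rows to insert, then source Ids to delete."""
    steps = []
    for batch in in_batches(records, size):
        steps.append({
            "insert": [to_archive_record(r) for r in batch],
            "delete_ids": [r["Id"] for r in batch],
        })
    return steps


cases = [{"Id": str(i), "Subject": "Old case", "ClosedDate": "2015-01-01"}
         for i in range(450)]
steps = plan_archive(cases)
print(len(steps), [len(step["insert"]) for step in steps])
```

Pairing each insert with the matching delete list keeps a batch self-contained, so a failed batch can be retried without touching the others.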

Pattern 2: Salesforce Big Objects and Async SOQL

Big Objects are provided on the Lightning Platform and work with familiar tools such as Apex and SOQL, letting you store, manage, query, and view millions to billions of records without complex external systems and integrations.

Store and control massive amounts of data on the Salesforce Platform. Capture and manage data with the Salesforce Platform tools you love. Run queries & compute ops designed for consistent performance at scale.

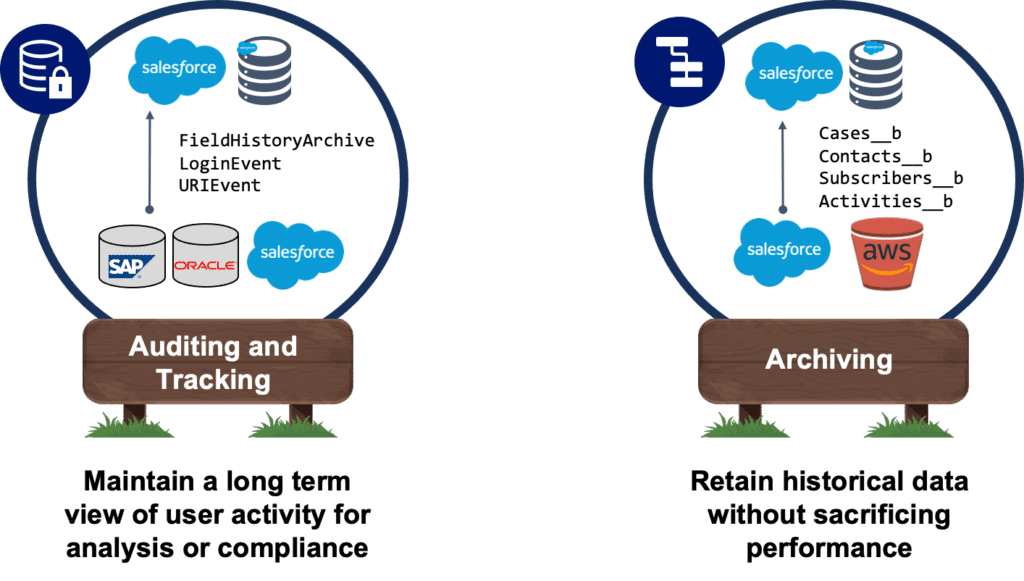

Here are the key use cases we see for our customers with big objects.

- Bring your big data to Salesforce for custom Lightning apps with all of your data. This means leveraging big objects to store data from multiple applications, not just Salesforce, and then creating custom applications on the Salesforce Platform using that big data, for example a 360-degree view of the customer or an operational risk management application.

- Auditing and tracking: a longer-term view of user activity or history, for example for compliance reasons. Again, this could mean both Salesforce and non-Salesforce data is stored in Big Objects.

- Standard archiving: keeping a historical data archive by moving data out of standard Salesforce objects into cold storage, where it is available if needed.

Benefits

- Consistent scale and performance on any volume of Salesforce data, be it millions or billions of records

- Native Salesforce data lake on platform: stored in a secure Salesforce data center and compliant, with no need to offload data to a 3rd party or manage/integrate a data lake

- Can be used in the context of existing Salesforce apps and CRM with familiar platform syntax and tooling

- Declarative setup or via Metadata API

- Async SOQL allows you to perform massive operations across your data

- Einstein Analytics Connector

- Powers many internal products like Shield, Pardot and Chatbots on the same technology, with a roadmap that includes Platform Events integration. 10x cost savings drive innovation across the business.

Challenges

- Standard Reports and Dashboards are available only on aggregate custom objects populated using Async SOQL

- Global search doesn’t support Big Objects

- Big Objects do not have regular IDs; instead they have primary key fields that are used to query them

- Big Objects don’t support sharing, but it can be achieved through a parent object lookup relationship

- Big Objects are not transactional, which limits workflow/process requirements

- Plan how you will use the Big Object’s primary key/index up front, since Big Objects do not yet support multiple indexes
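Because a Big Object is queried through its primary key/index, it helps to check planned queries against the index order early. The sketch below is a simplified Python check based on the commonly documented restrictions: filters must follow the index fields in order from the first field with no gaps, and only the last filtered field may use a range or `IN` operator. The field names and the `check_index_filter` helper are assumptions for illustration; verify the exact rules against the current Salesforce documentation.

```python
from typing import List, Tuple

# Operators assumed to be allowed on the last filtered index field.
LAST_FIELD_OPS = {"=", "<", ">", "<=", ">=", "IN"}


def check_index_filter(index_fields: List[str],
                       filters: List[Tuple[str, str]]) -> List[str]:
    """Return a list of problems; an empty list means the filter fits the index.

    `index_fields` is the ordered composite index. `filters` is the ordered
    list of (field, operator) pairs from the planned WHERE clause.
    """
    problems = []
    for position, (field, op) in enumerate(filters):
        if position >= len(index_fields):
            problems.append(f"{field}: more filters than index fields")
            continue
        expected = index_fields[position]
        if field != expected:
            problems.append(f"{field}: expected index field {expected} here")
        is_last = position == len(filters) - 1
        allowed = LAST_FIELD_OPS if is_last else {"="}
        if op not in allowed:
            problems.append(f"{field}: operator {op} not allowed at this position")
    return problems


# Hypothetical index on a hypothetical archive Big Object.
index = ["Account__c", "Event_Date__c", "Event_Id__c"]
print(check_index_filter(index, [("Account__c", "="), ("Event_Date__c", ">=")]))
print(check_index_filter(index, [("Event_Date__c", ">=")]))
```

The second call reports a problem because the query skips `Account__c`, the first field of the assumed index. This is the kind of access pattern that has to be settled before the index is defined.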

Archiving OFF Salesforce Platform

Let's see which options are available for solutions off the Salesforce platform.

Pattern 3: Replicate Data to a Data Lake and Delete It from Salesforce

Salesforce is the system of record, but once a record is no longer needed by the business, it is moved out of Salesforce and loaded into an external application such as a Data Lake.

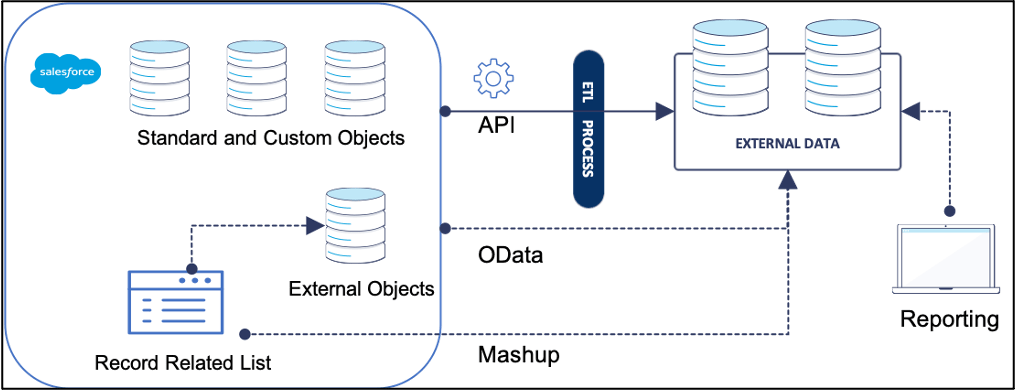

The following is an example of what the architecture might look like, although the details depend on the business requirements defined as part of the data archiving strategy.

- ETL tools such as Informatica or Talend, in charge of exporting data from Salesforce into the external application, checking for metadata changes and restoring data if needed

- Page Layout/Related List/Search in Salesforce to let users see the archived data, using External Objects (with OData) or a mashup callout

- Record visibility and reporting on the external application
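The export, verify, delete and restore steps of such an ETL process can be sketched as follows. This is a minimal in-memory Python model, not a real ETL job: the dictionaries stand in for Salesforce and the data lake, and the record shape, function names and retention period are all assumptions.

```python
from datetime import date, timedelta
from typing import Dict, List


def select_for_archive(records: List[dict], today: date,
                       retention_days: int) -> List[dict]:
    """Pick records closed before the retention cutoff."""
    cutoff = today - timedelta(days=retention_days)
    return [r for r in records if r["closed_on"] < cutoff]


def archive(source: Dict[str, dict], lake: Dict[str, dict],
            today: date, retention_days: int) -> List[str]:
    """Copy eligible records to the lake, verify, then delete from source."""
    archived = []
    eligible = select_for_archive(list(source.values()), today, retention_days)
    for record in eligible:
        record_id = record["id"]
        lake[record_id] = dict(record)      # load step
        if lake.get(record_id) == record:   # verify the copy before deleting
            del source[record_id]
            archived.append(record_id)
    return archived


def restore(source: Dict[str, dict], lake: Dict[str, dict],
            record_id: str) -> None:
    """Bring an archived record back into the source system."""
    source[record_id] = dict(lake[record_id])


salesforce = {
    "a": {"id": "a", "closed_on": date(2015, 1, 1)},
    "b": {"id": "b", "closed_on": date(2024, 6, 1)},
}
data_lake: Dict[str, dict] = {}
print(archive(salesforce, data_lake, today=date(2024, 7, 1), retention_days=365))
```

Deleting only after the copy has been verified means a failed load never loses the record, at the cost of temporarily holding the data in both systems.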

Benefits & Challenges

Benefits and challenges of using a data archiving solution off Salesforce:

Benefits

- Leverages existing investments in internal applications or BI tools for reporting

- Reduces Salesforce storage utilization

- Can be aligned with Enterprise Archive Strategy

- Archive repository data model can be customized

- Archive data accessible via Salesforce Connect (formerly Lightning Connect), callout or mash-up

Challenges

- Solution complexity and cost are high

- Requires implementing ETL to move records out of Salesforce

- Significant development, setup and operations effort

- Requires data security controls to be built in

- Metadata/object structure must be kept synchronized

- Performance (the external system has to guarantee the speed at scale needed by the use case)

Selection Matrix – Evaluating the Data Archiving Solutions

| Area | Custom Object | Big Object | External Repository(*) (including AppExchange) |
| --- | --- | --- | --- |
| Infrastructure | Salesforce | Salesforce | External Solution |
| Integration | No | No | Yes |
| Cost (dev, maintenance) | Low | Low/Medium | Medium/High |
| Cost (storage) | High | Medium | Medium/Low |
| Amount of Data | Millions | Billions | Any (consider API Integration Limits) |
| Data Retrieval | Declarative/Programmatic | Declarative/Programmatic | API |
| Data Security | Provided by Salesforce | Provided by Salesforce | Provided by External Solution |
| Reporting | Full reporting | Standard Report limited** (Extend with EA) | Full reporting (subject to volume) |
| Data Retention | Low | High | High |
| Skillset | Salesforce | Salesforce | Salesforce, Integration, Others |
| Technology | Consolidated | Emergent | Consolidated |
| Good fit for | Small data volume | Large data volume | Leverages existing investment |

Data Archiving Strategy and Backup in Salesforce Video

Learn more about Salesforce Data Management Strategy.

Difference Between Archives and Backups

An archive includes historical, rarely used Salesforce data located out of production. Upon archiving, the information moves to long-term storage for future use. Archiving is about selecting subsets of data from production environments to move to external, long-term storage.

A backup, by contrast, is a copy of your entire Salesforce production/sandbox environment that you can use to quickly restore data in the event of accidental data loss or corruption caused by human error, bad code, or malicious intent.

AppExchange tools for Backup & Restore

Here is a list of AppExchange tools available for backup and restore:

- OwnBackup

- CopyStorm

- Spanning

- CloudAlly

- Odaseva

- Backupify

- GRAX (Heroku + HardingPoint)

- Cervello (Heroku-based solution)

- DataArchiva

- DBAmp

- Sesame Software

- sfApex

- Skyvia

- AutoRabit Vault

- Commvault

- AvePoint
