A few weeks ago we hosted a session with Lars Malmqvist on “Architecting with AI – The New Frontier for Salesforce Architects”. The session was about embracing the new reality of AI and understanding its transformative impact on Salesforce architecture. It explored the probabilistic, model-based, and data-dependent nature of AI solutions.

We discussed the autonomous and evolving characteristics that set AI systems apart from traditional systems, and highlighted the potential ethical implications of AI-centric designs. A special focus was given to Large Language Models (LLMs) and their transformative role in the Salesforce ecosystem. The session also delved into cautionary tales of unanticipated AI behavior and how to counteract such instances with adequate supervision and monitoring. This talk is essential for all Salesforce architects seeking to leverage AI for improved business outcomes.

What distinguishes AI architecture

Let's see what distinguishes architecting with AI.

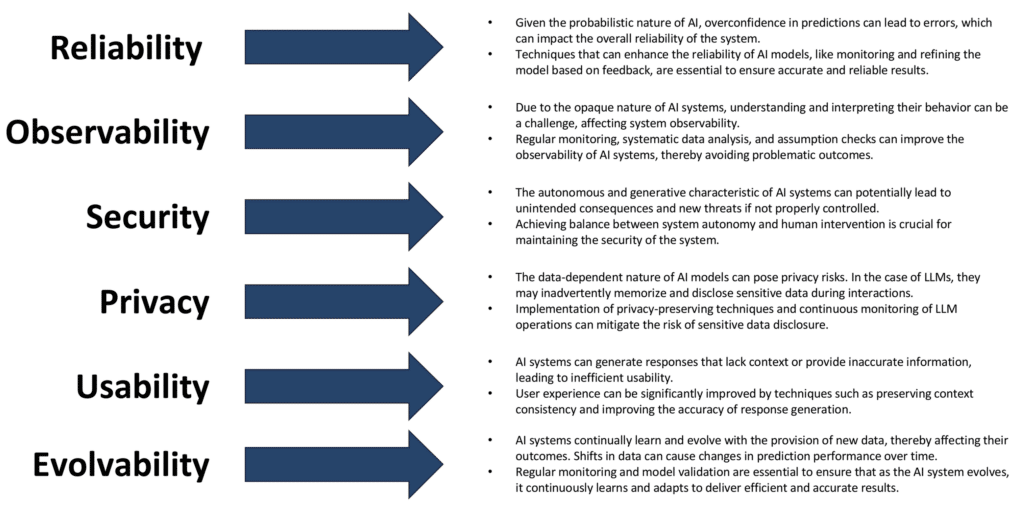

Probabilistic

AI solutions are inherently probabilistic, meaning they predict possibilities rather than certainties. This trait can lead to errors in one-off events but proves significantly useful in predicting outcomes of repeatable events. A cautious approach is necessary when designing AI systems to avoid failure due to overconfidence in predictions.
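
One way to act on this caution is to route low-confidence predictions to a person instead of acting on them automatically. The sketch below is only an illustration: the `Prediction` class, the `route` function, and the 0.8 threshold are hypothetical names and values, not something presented in the session.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model's estimated probability, between 0.0 and 1.0


def route(prediction: Prediction, threshold: float = 0.8) -> str:
    """Return 'auto' when the model is confident enough, else 'human_review'.

    The threshold is an assumed value; in practice it would be tuned
    against the cost of a wrong automated decision.
    """
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    return "auto" if prediction.confidence >= threshold else "human_review"


if __name__ == "__main__":
    print(route(Prediction("likely_to_churn", 0.93)))
    print(route(Prediction("likely_to_churn", 0.55)))
```

The point of the design is that the system never treats a prediction as a certainty: anything below the chosen threshold is handed to a human.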

Model-based

Traditional solutions use prescriptive code while AI solutions use models to solve problems. Capturing all process complexities in AI models is neither possible nor beneficial. AI architects must strike a balance between model size and detail required to create an efficient AI system without over-reliance on excessive detail.

Data-dependent

The quality and quantity of data determine the prediction accuracy in AI systems. High-quality, plentiful data can significantly improve AI capabilities, such as recommendation systems. Although better algorithms make AI solutions more efficient, more data generally has the bigger impact.

Autonomous

Greater autonomy given to AI systems can potentially result in unintended consequences. As AI systems grow more complex, problems could escalate and may require human intervention. It is crucial to have appropriate monitoring and humans involved in processes to avoid significant adverse impacts.

Opaque

AI systems can be hard to interpret and understand, which can lead to problematic outcomes. Debugging AI systems is not as straightforward as in traditional systems, making model evaluation crucial. Systematic data analysis, assumption checks, and careful implementation can mitigate the opacity problem.

Evolving

AI systems continually learn and evolve from the data provided. Shifts in data can cause changes in prediction performance over time. Regular monitoring and validation of models are essential as the AI progressively learns and adapts.
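
A minimal sketch of such a check, assuming you keep a baseline accuracy from validation time and periodically collect recent predictions alongside the actual outcomes. The function names and the 0.05 tolerance are hypothetical, chosen only for illustration.

```python
from typing import Sequence


def accuracy(predicted: Sequence, actual: Sequence) -> float:
    """Fraction of predictions that match the actual outcomes."""
    if len(predicted) != len(actual) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def has_degraded(
    baseline_accuracy: float,
    recent_predicted: Sequence,
    recent_actual: Sequence,
    tolerance: float = 0.05,  # assumed acceptable drop before alerting
) -> bool:
    """True when recent accuracy falls more than `tolerance` below the baseline."""
    recent = accuracy(recent_predicted, recent_actual)
    return recent < baseline_accuracy - tolerance


if __name__ == "__main__":
    # Two of four recent predictions are wrong, so accuracy is 0.5.
    print(has_degraded(0.9, [1, 1, 0, 0], [1, 0, 0, 1]))
```

A check like this only detects that performance has shifted; deciding whether to retrain or roll back the model is still a human judgment.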

Ethically valent

AI systems can reflect inherent data biases, leading to ethical issues. These biases can cause systems to make insensitive or inappropriate associations. Adhering to responsible use principles helps mitigate these risks.

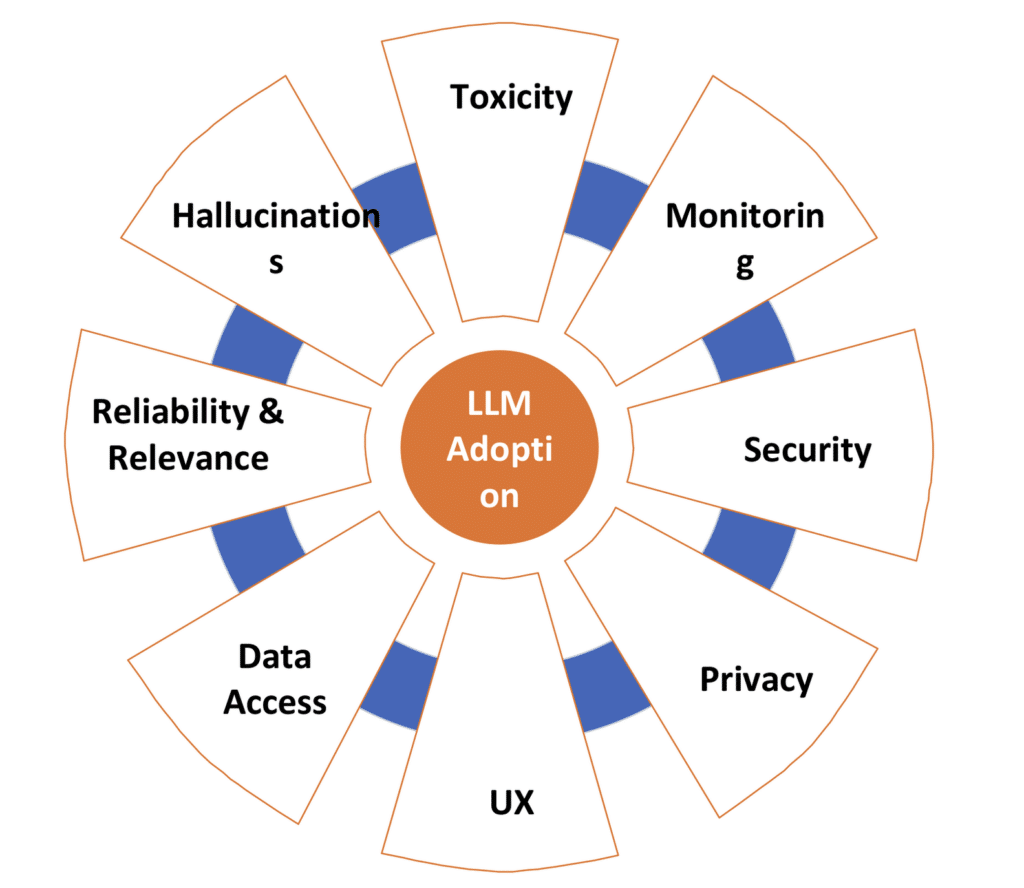

Challenges with LLMs

Toxicity

LLMs can inadvertently produce outputs that foster stereotypes, threaten minority groups or encourage harmful behavior. The challenge necessitates the development of mechanisms for detecting and mitigating these biases and ensuring compliance with organizational and societal standards.

Hallucinations

Hallucinations refer to instances where the model generates plausible but made-up information, which can be misleading or lead to memory distortion in responses.

This issue is amplified by ever-changing real-world data, the unavailability of proprietary datasets, and the challenges of scaling solutions that leverage external knowledge for grounded responses.

Reliability & Relevance

Responses generated by LLMs may be inaccurate or unreliable, or may lack context and relevance, especially when the prompt provides insufficient information.

This emphasises the need for cautious implementation of LLMs wherever critical decisions and operations are involved.

Data Access

Enterprises often store an extensive range of structured data, which LLMs, designed for unstructured data, find difficult to comprehend and reason over effectively.

The challenge of managing and providing access to structured data necessitates innovative solutions, including interface augmentation and data linearization.
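
Data linearization means flattening a structured record into text that can be placed in a prompt. The sketch below shows one simple way to do it; the `linearize_record` function, the `Field: value` layout, and the sample Opportunity record are assumptions made for illustration, not a format prescribed in the session.

```python
from typing import Any, Dict


def linearize_record(object_name: str, record: Dict[str, Any]) -> str:
    """Flatten one record into a single 'Field: value' line for a prompt.

    Fields whose value is None are skipped so the prompt does not carry
    empty attributes.
    """
    parts = [
        f"{field}: {value}"
        for field, value in record.items()
        if value is not None
    ]
    return " | ".join([object_name] + parts)


if __name__ == "__main__":
    opportunity = {"Name": "Acme renewal", "Amount": 12000, "CloseDate": None}
    print(linearize_record("Opportunity", opportunity))
```

Keeping field names next to their values gives the model some of the context that the table structure would otherwise provide.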

UX

LLMs’ inability to maintain consistent context and provide accurate information can disrupt the user experience, leading to confusion and mistrust.

Integrating LLMs into existing enterprise systems and workflows raises further user experience challenges.

Privacy

The inherent ability of LLMs to remember sensitive data raises concerns about inadvertent disclosure of such information during interactions.

Due to this, implementing privacy-preserving techniques and continuously monitoring LLM operations are necessary for enterprise LLM architectures.
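
One basic privacy-preserving step is to mask obvious identifiers before text is sent to an LLM. The following is a minimal sketch that only handles email addresses; the `mask_emails` function, the `[EMAIL]` placeholder, and the simplified regular expression are assumptions for illustration, and a real deployment would need to cover far more kinds of sensitive data.

```python
import re

# Simplified pattern for illustration; it does not cover every valid address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_emails(text: str, placeholder: str = "[EMAIL]") -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com about the renewal."
    print(mask_emails(prompt))
```

Masking before the call means the sensitive value never reaches the model, so it cannot be remembered or disclosed later.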

Security

LLMs are susceptible to adversarial attacks, posing significant security risks. In addition, the use of LLMs may not comply with upcoming regulatory requirements. This raises the necessity to develop mitigation strategies to ensure secure and compliant use of LLMs in enterprise systems.

Monitoring

The complexity of operationalizing LLMs into business processes and ensuring their compliance with business standards can lead to failures.

Robust automation and an efficient monitoring system that detects deviations in LLM performance are crucial.

How this affects architecture practice

Summary

Thanks to Lars Malmqvist for such a great session on Architecting with AI.